There is a specific kind of fatigue that comes from sitting in a node-based workflow at 2:00 AM, adjusting a single weight by 0.05 just to see if the specular highlight on a subject's nose settles into the skin or sits on top of it like plastic. Your nerves are a bit shot from too much coffee... you're waiting for the next generation... "Did I get it right? Is this the right direction?"

At Arcworks, we’ve been deep in the trenches testing a relatively complicated local build of Stability Matrix and ComfyUI, pushing SDXL to its absolute limit. The goal wasn’t just "realism"—the internet is already flooded with smooth, airbrushed AI faces... you see them everywhere these days.

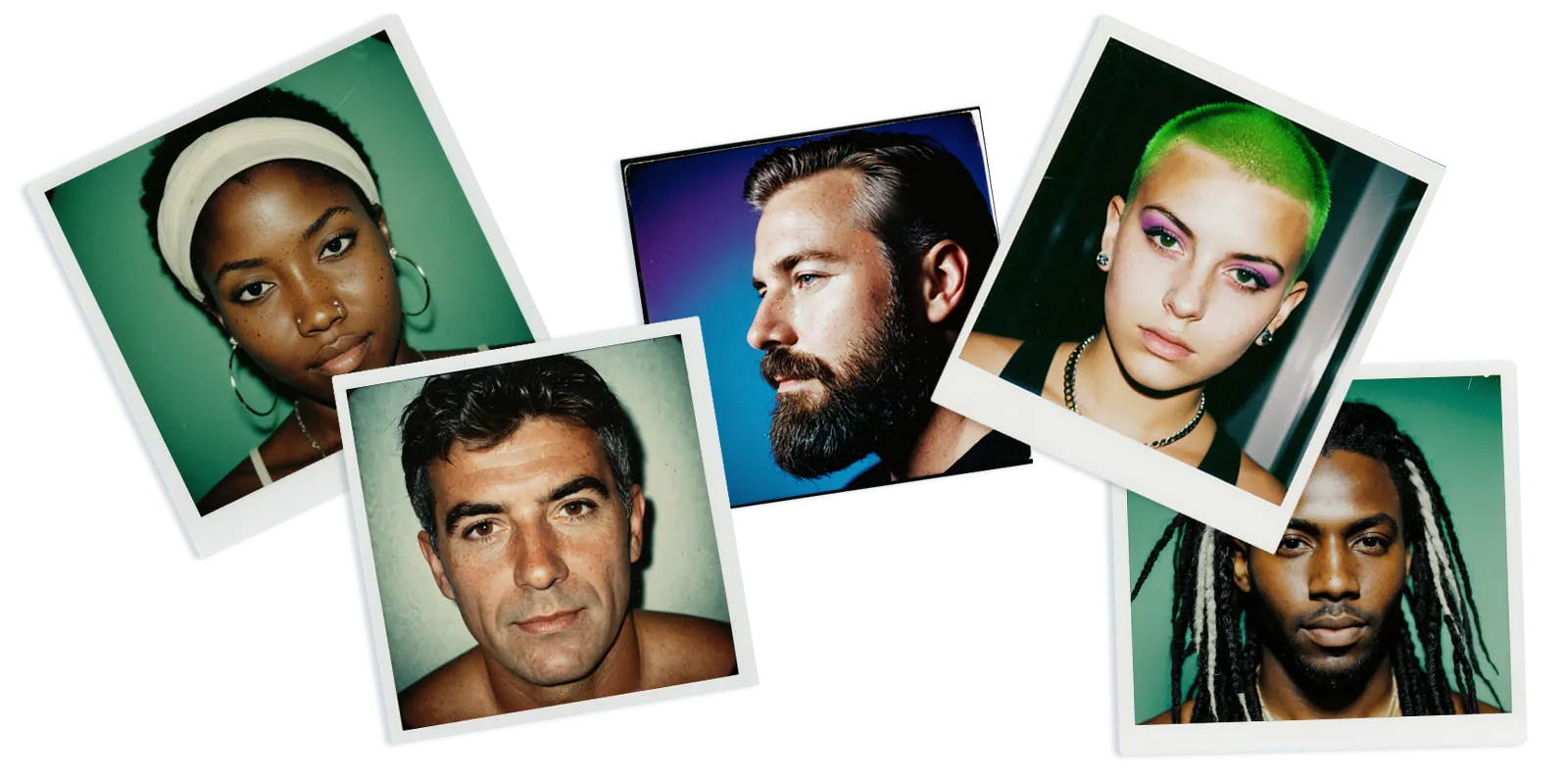

Our goal was authenticity, testing limits, and slop-avoidance.

For this experiment we wanted the grit of a Polaroid, the unevenness of freckles, and the "human" imperfections that tell a story. At Arcworks, we believe that telling the story the right way, with the right visuals, is very important.

So... there is a benefit to having a beast of a workstation with a dedicated GPU that can crank through iterations, but you can build this workflow without our specific setup. It's about robust prompt engineering, understanding the ecosystem you're in, and knowing what limitations it has.

Let's continue...

Engineering the Vibe

Creating these portraits wasn't a "one-click" miracle. It took a number of late-night iterative marathons and weeks of building custom workflows that prioritize skin texture over "perfection." A special shout-out to Radial Coffee's Cosa Nueva flagship blend for the late-night support... straight up lifesaver.

Using SDXL within ComfyUI allows us to treat the generation process like a physical darkroom. We aren't just prompting; we are:

- Balancing LoRAs: Layering subtle film-grain models with high-fidelity anatomy weights.

- Negative Prompt Sculpting: Removing the "digital sheen" that so often betrays AI-generated content.

- Node-Based Control: Using specific upscaling workflows to ensure that when you look at a portrait, you see pores and peach fuzz, not smoothed-out pixels.

- Adding The Third Dimension: Depth Mapping & Displacement
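As a rough illustration of the LoRA-balancing and negative-prompt bullets above (not the actual Arcworks graph), here is a minimal sketch that builds a fragment of a ComfyUI API-format workflow in Python. The node types `CheckpointLoaderSimple`, `LoraLoader`, and `CLIPTextEncode` are stock ComfyUI nodes; the file names, strengths, and prompt text are hypothetical placeholders, and the sampler, VAE, and upscaling nodes are left out.

```python
import json


def build_portrait_graph(loras, positive, negative,
                         checkpoint="sdxl_base.safetensors"):
    """Build a partial ComfyUI API graph: checkpoint -> chained LoRAs -> prompts.

    `loras` is a list of (file_name, strength) pairs applied in order.
    All file names here are hypothetical placeholders.
    """
    graph = {
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": checkpoint}},
    }
    # A link is [source_node_id, output_index]; the loader emits MODEL=0, CLIP=1.
    model_ref, clip_ref = ["1", 0], ["1", 1]

    # Each LoRA patches the model and CLIP produced by the previous node,
    # so nudging one strength by 0.05 only touches one entry in `loras`.
    for name, strength in loras:
        node_id = str(len(graph) + 1)
        graph[node_id] = {
            "class_type": "LoraLoader",
            "inputs": {
                "lora_name": name,
                "strength_model": strength,
                "strength_clip": strength,
                "model": model_ref,
                "clip": clip_ref,
            },
        }
        model_ref, clip_ref = [node_id, 0], [node_id, 1]

    # Positive prompt first, negative prompt second, both on the patched CLIP.
    for text in (positive, negative):
        node_id = str(len(graph) + 1)
        graph[node_id] = {"class_type": "CLIPTextEncode",
                          "inputs": {"text": text, "clip": clip_ref}}
    return graph


if __name__ == "__main__":
    demo = build_portrait_graph(
        loras=[("film_grain.safetensors", 0.35),    # hypothetical grain LoRA
               ("skin_detail.safetensors", 0.60)],  # hypothetical detail LoRA
        positive="polaroid portrait, freckles, visible pores, peach fuzz",
        negative="airbrushed, plastic skin, digital sheen, oversmoothed",
    )
    print(json.dumps(demo, indent=2))
```

Keeping the LoRA list as data makes those 2:00 AM adjustments a one-number change rather than a rewiring job, and the negative prompt lives right next to the positive one so both can be tuned together.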

Depth and Tilt

The "Lab" isn't just about the 2D surface. To push these portraits into the realm of narrative storytelling, we’ve integrated Depth Mapping and Z-buffer extraction into our ComfyUI workflows as well. By generating high-contrast displacement maps alongside the RGB image, we can treat the portrait as a 3D object.
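To make "treat the portrait as a 3D object" concrete, here is a minimal NumPy sketch, under the assumption that the depth map has already been exported from the workflow as a 2D array. The function names, the percentile stretch, and the `scale` value are illustrative choices, not the pipeline's actual settings.

```python
import numpy as np


def to_displacement(depth, low_pct=2.0, high_pct=98.0):
    """Stretch a raw depth map into a high-contrast 0..1 displacement map."""
    depth = np.asarray(depth, dtype=np.float64)
    lo, hi = np.percentile(depth, [low_pct, high_pct])
    if hi <= lo:  # completely flat map: nothing to displace
        return np.zeros_like(depth)
    # Clip the extremes so a few outlier pixels don't flatten the face.
    return np.clip((depth - lo) / (hi - lo), 0.0, 1.0)


def displace_grid(disp, scale=0.2):
    """Turn an HxW displacement map into an HxWx3 grid of (x, y, z) vertices.

    x and y run 0..1 across the image; z is the displacement times `scale`.
    """
    h, w = disp.shape
    ys, xs = np.mgrid[0:h, 0:w]
    x = xs / max(w - 1, 1)
    y = ys / max(h - 1, 1)
    return np.stack([x, y, disp * scale], axis=-1)
```

The resulting vertex grid is what a 3D package would displace a flat plane into; the RGB image then acts as its texture.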

This allows for:

- High-End Displacement Animation: Using the depth data to create "living" portraits with subtle parallax and micro-movements (cool, right?).

- Dynamic Lighting Post-Generation: Relighting the subject in a 3D environment by using the map to dictate how shadows should fall across the geometry of the face.

- Cinematic Depth of Field: Working to acquire total control over the focal plane, allowing for a rack-focus effect that feels optical rather than digital.
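The three effects above can each be approximated in a few lines of NumPy. This is a simplified sketch under stated assumptions, not the production approach: `image` is a float HxWx3 array in 0..1, `disp` is an HxW map where 1.0 means closest to the camera, and the function names and default values are ours for illustration. A real pipeline would likely use a proper 3D renderer and a lens-shaped blur.

```python
import numpy as np


def parallax_frame(image, disp, shift_px):
    """Shift near pixels further than far ones to fake a small camera move."""
    h, w = disp.shape
    ys, xs = np.mgrid[0:h, 0:w]
    # Backward lookup: each output pixel samples from a depth-scaled offset.
    src_x = np.clip(np.rint(xs - shift_px * disp).astype(int), 0, w - 1)
    return image[ys, src_x]


def relight(image, disp, light_dir=(0.5, -0.5, 1.0), relief=4.0, ambient=0.3):
    """Lambert-style relighting using normals derived from the depth slope."""
    dz_dy, dz_dx = np.gradient(disp * relief)
    normals = np.dstack([-dz_dx, -dz_dy, np.ones_like(disp)])
    normals /= np.linalg.norm(normals, axis=2, keepdims=True)
    light = np.asarray(light_dir, dtype=np.float64)
    light /= np.linalg.norm(light)
    diffuse = np.clip(normals @ light, 0.0, 1.0)
    shade = ambient + (1.0 - ambient) * diffuse
    return np.clip(image * shade[..., None], 0.0, 1.0)


def box_blur(image, radius):
    """Plain box blur built from shifted copies of an edge-padded image."""
    h, w = image.shape[:2]
    padded = np.pad(image, ((radius, radius), (radius, radius), (0, 0)),
                    mode="edge")
    out = np.zeros_like(image, dtype=np.float64)
    k = 2 * radius + 1
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def depth_of_field(image, disp, focus, radius=2, falloff=0.25):
    """Blend sharp and blurred copies by distance from the focal depth."""
    amount = np.clip(np.abs(disp - focus) / falloff, 0.0, 1.0)[..., None]
    return (1.0 - amount) * image + amount * box_blur(image, radius)
```

Calling `parallax_frame` with a slowly oscillating `shift_px` gives the "living portrait" drift, and sweeping `focus` from 1.0 down to 0.0 across frames in `depth_of_field` produces a basic rack focus.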

Why Do This? (The Creative Use Case)

A colleague I was talking with recently asked, while I was explaining the process: "If you want a portrait, why not just hire a photographer?" The answer is simple: Speed to Vision. These portraits aren't the final destination; they help build the roadmap. In the Arcworks ecosystem, these digital personas serve critical roles:

- Narrative Storyboarding: We can "cast" a protagonist for a brand story in minutes, testing how they look under different lighting or emotional states before a production budget is even touched. Sometimes text isn't enough.

- Persona Development: When we build a brand, we don't just write a "target audience" bio. We create them. We see the jewelry they wear, the way they do their eyeliner, and the intensity in their eyes.

- Creative Support: These images act as high-level "mood boards" for our human collaborators. A makeup artist or a lighting tech can look at a journalist-style portrait and instantly understand the vibe we’re chasing.

The Human Element?

It’s important to draw a line in the sand: This is a tool, not a replacement.

The images you see here are the result of human hours spent tweaking code and parameters. They represent a kind of "new frontier" in creative function and support. We believe that by using AI to handle some of the initial heavy lifting of visualization, we free up our human photographers, models, and directors to do what they do best: bring the soul.

We’ve moved past the "uncanny valley." We’re now in the "uncanny studio," and the possibilities for narrative storytelling are just getting started.